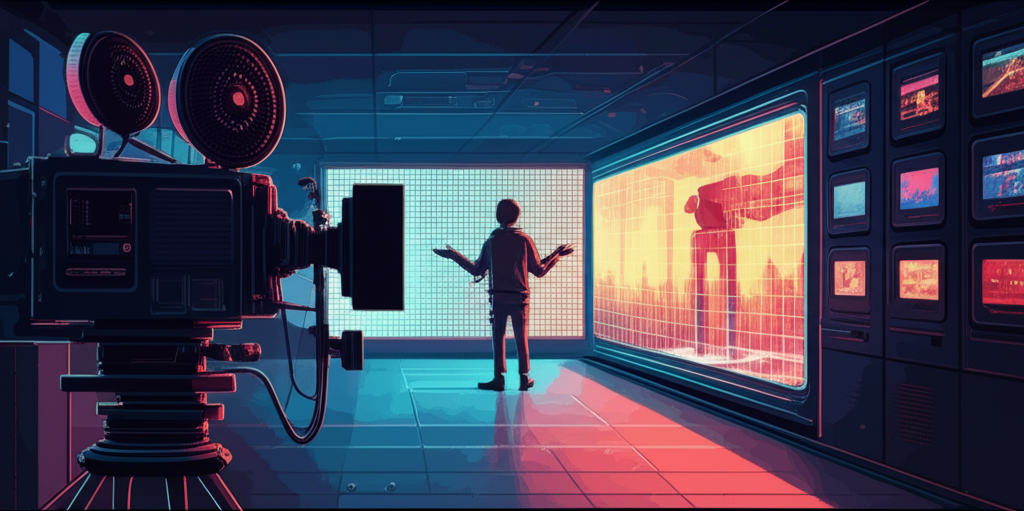

Imagine conjuring cinematic scenes from mere words, breathing life into your most outlandish ideas with a few keystrokes. That’s the promise, the siren song, of Text-to-Video (T2V) technology. Type a description, any description, and watch as the AI weaves pixels into motion, transforming prose into moving pictures. It’s a digital alchemy that has the tech world buzzing and the creative industries intrigued, and it raises questions that strike at the heart of art, ownership, and truth itself.

But let’s not mistake this for a bolt from the blue. This “overnight sensation” has been simmering for decades, a slow burn of computational creativity.

The “How Did We Get Here?” Story: A Brief History of Pixels & Prose

The journey from rudimentary animation algorithms to sophisticated AI models capable of generating video from text is a fascinating one. Early attempts to convert text into visuals did exist, but they amounted to basic scene assembly, the equivalent of digital dioramas.

The true catalyst was the AI revolution, specifically the rise of generative AI (such as diffusion models) alongside large language models. Trained on colossal datasets of images, video, and text, these models learned the intricate dance between language and visuals. This wasn’t just about recognizing objects; it was about understanding context, relationships, and the very essence of visual storytelling. We went from assembling static scenes to choreographing digital ballets. Each leap in coherence, fidelity, and sheer length of generated video was a “whoa!” moment, a signpost on the road to this brave new world.

Today’s Buzz: Love It or Loathe It? The Current Vibe Around T2V

The hype surrounding T2V is palpable, a contagious enthusiasm spreading through various sectors. Content creators, filmmakers, marketers, and even casual social media users are starting to see T2V as a powerful tool, not just for quick content creation but also for visualizing ideas and prototyping concepts. The technology also carries the allure of democratizing video creation, empowering people who lack traditional skills and equipment to bring their visions to life.

However, skepticism lingers in the shadows. The “uncanny valley” effect rears its head: AI-generated figures that look almost, but not quite, real can feel unsettling and artificial. Creators worry about losing control, questioning whether they can fine-tune outputs and maintain a unique artistic voice. The fear of job displacement looms, with animators, editors, and filmmakers wondering whether algorithms will usurp their roles. The public’s reaction is a mixture of awe, confusion, and cautious anticipation, a collective wait-and-see approach.

The Elephant in the Room: Deepfakes, Dilemmas, & Digital Drama

The potential of T2V is inextricably linked to the specter of deepfakes. The ability to generate highly realistic yet entirely fabricated footage opens a Pandora’s Box of ethical and societal concerns. Misinformation and propaganda gain a new level of believability, threatening to erode trust and destabilize societies.

The question of copyright further complicates the landscape. If copyrighted materials are used to train AI models without permission, who is liable? Who owns the videos generated by AI? The user? The AI company? Or do they belong to the public domain?

These debates lead straight into an ethical minefield. Can someone be “filmed” by AI without their knowledge or consent, their likeness manipulated and exploited in ways they never imagined? If the data used to train the AI is biased, will its outputs amplify those biases, perpetuating harmful stereotypes and inequalities? Experts, lawmakers, and the public are grappling with how to regulate this technology responsibly, a task that feels like threading a needle in a hurricane.

Gazing into the Crystal Ball: What’s Next for Text-to-Video?

Looking ahead, the trajectory of T2V points toward hyper-realistic outputs, higher resolutions, and longer, more complex narratives. We can anticipate more nuanced control over specific elements – character emotions, camera angles, lighting, and visual styles – allowing creators to achieve their vision with unprecedented precision.

T2V may also merge with other AI-powered tools to create fully AI-generated multimedia experiences. Imagine pairing it seamlessly with text-to-speech, AI music generation, and 3D modeling, blurring the line between reality and imagination.

The potential applications of T2V are vast and varied. Personalized interactive storytelling, virtual tourism, rapid game development, and architectural visualization are just a few possibilities. Realizing them responsibly will require watermarking AI-generated content, establishing ethical guidelines, and prioritizing transparency.

Conclusion: The Journey Has Just Begun

Text-to-Video technology stands on the cusp of revolutionizing how we create and consume visual media, a fundamental shift with profound implications. The journey has just begun. It’s up to us, the creators, the consumers, and the custodians of this technology, to shape its future responsibly. What role will we play in this unfolding drama?